MISP Statistics

The MISP API includes a couple of features that you can use to report on the type of data stored in the database. For example, the User statistics and Attribute statistics give a good overview. Unfortunately, it is currently not possible to limit the output of these functions to a specific timeframe. For my use case I want to report on the MISP data statistics for the last month. The information that I want to include is:

- How many new or updated events?

- How many new or updated attributes?

- How many new or updated attributes with IDS flag?

- The category of the attributes

- TLP-coverage

PyMISP example module

I wrote a PyMISP script that does all of the above and more. The script fetches the event and attribute data for a given timeframe and then reports the statistics. The report is sent via e-mail and the data is attached as individual CSV files.

The script, stats_report.py, can be found in the PyMISP repository. Its configuration is inline in the main module. If you want to receive the reports by e-mail you will have to change

- smtp_from

- smtp_to

- smtp_server
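To illustrate what a report mail with CSV attachments looks like, here is a minimal sketch using only the Python standard library. It is not the code from stats_report.py: the function name `build_report_mail`, the `tables` parameter and the example.org addresses are assumptions made for this example.

```python
import csv
import io
from email.message import EmailMessage


def build_report_mail(smtp_from, smtp_to, subject, body, tables):
    """Build a report e-mail with one CSV attachment per table.

    `tables` (hypothetical parameter) maps a file name to a list of rows.
    """
    msg = EmailMessage()
    msg["From"] = smtp_from
    msg["To"] = smtp_to
    msg["Subject"] = subject
    msg.set_content(body)

    for filename, rows in tables.items():
        # Serialise the rows to CSV text in memory, then attach it.
        buffer = io.StringIO()
        csv.writer(buffer).writerows(rows)
        msg.add_attachment(buffer.getvalue(), subtype="csv", filename=filename)
    return msg


# Placeholder addresses and numbers, for illustration only.
msg = build_report_mail(
    "misp@example.org",
    "soc@example.org",
    "MISP Report for last 30d",
    "New or updated events: 658\n",
    {"tlp.csv": [["tlp:white", 338], ["tlp:green", 286]]},
)
```

Sending is left out of the sketch. With the standard library, `smtplib.SMTP(smtp_server).send_message(msg)` would be the usual way to hand the message to your mail server.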

The script should be run from cron and accepts these parameters

- -t : the timeframe; typically you’ll use ‘-t 30d’

- -e : include the MISP event titles in the output

- -m : mail the report; without it, the output is only printed to screen
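The three parameters above map naturally onto `argparse`. The following is a sketch of such a command-line interface, not a copy of the parser in stats_report.py: the long option names, the `dest` names and the default of `30d` are assumptions for this example.

```python
import argparse


def parse_args(argv=None):
    """Parse the report options; long option names are hypothetical."""
    parser = argparse.ArgumentParser(description="MISP statistics report (sketch)")
    parser.add_argument("-t", "--timeframe", default="30d",
                        help="timeframe to report on, e.g. 30d")
    parser.add_argument("-e", "--event-titles", dest="event_titles",
                        action="store_true",
                        help="include the MISP event titles in the output")
    parser.add_argument("-m", "--mail", action="store_true",
                        help="mail the report instead of only printing it")
    return parser.parse_args(argv)


# Equivalent to running the script with "-t 30d -m".
args = parse_args(["-t", "30d", "-m"])
```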

A typical use from cron would then be

*/5 * * * * mispuser /usr/bin/python3 /home/mispuser/PyMISP/examples/stats_report.py -t 30d -m -e

Refactor the output

Part of the statistics, and in fact their quality, relies on how the contributors have added the data. For instances that receive events from different sources this can result in a lack of consistency, or even of data quality. The script includes some basic logic to deal with this, but you might have to tune it to your environment.

Event and attribute statistics

The first part of the statistics should normally be usable in all environments. Note that if you send your report to people outside your organisation, you should indicate that the data concerns new or updated events.

MISP Report 2019-07-12 23:53:12 for last 30d on https://XXXXX/

-------------------------------------------------------------------------------

New or updated events: 658

New or updated attributes: 24834

New or updated attributes with IDS flag: 14484

Total events: 60293

Total attributes: 7382714

Total users: 2519

Total orgs: 1208

Total correlation: 8521911

Total proposals: 77595

Items to include in your report based on the output of this script are

- Evolution of number of events and attributes over time

- Evolution of the number of attributes with IDS flag over time
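The "new or updated" counters in the report can be derived from the events returned for the timeframe. The sketch below assumes each event is a plain dictionary in the MISP core format, with an `Attribute` list whose items carry a boolean `to_ids` field. The function name `summarise` and the sample data are hypothetical, and attributes nested inside MISP objects are ignored for brevity.

```python
def summarise(events):
    """Count events, attributes and attributes with the IDS flag set."""
    attributes = [attr for event in events for attr in event.get("Attribute", [])]
    return {
        "events": len(events),
        "attributes": len(attributes),
        "attributes_ids": sum(1 for attr in attributes if attr.get("to_ids")),
    }


# Made-up sample data, shaped like events in the MISP core format.
sample_events = [
    {"info": "Phishing campaign", "Attribute": [
        {"category": "Network activity", "to_ids": True},
        {"category": "Payload delivery", "to_ids": False},
    ]},
    {"info": "Banking trojan", "Attribute": [
        {"category": "Network activity", "to_ids": True},
    ]},
]

summary = summarise(sample_events)
```

Storing one such summary per reporting period is enough to plot the evolution over time.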

Attribute category

The next part that’s interesting to report is the number of attributes per category. According to the MISP core format RFC, the category represents the intent of what the attribute is describing as selected by the attribute creator.

Network activity 9530

Payload delivery 4963

Antivirus detection 3914

Financial fraud 3114

External analysis 1828

Artifacts dropped 694

...
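A per-category count like the one above can be produced with `collections.Counter`. This is a sketch under the assumption that events are dictionaries in the MISP core format, each with an `Attribute` list whose items have a `category` field. The function name and the sample data are made up for illustration.

```python
from collections import Counter


def count_categories(events):
    """Return a Counter of attribute categories across all events."""
    return Counter(
        attr["category"]
        for event in events
        for attr in event.get("Attribute", [])
    )


# Made-up sample data.
sample_events = [
    {"Attribute": [{"category": "Network activity"},
                   {"category": "Payload delivery"}]},
    {"Attribute": [{"category": "Network activity"}]},
]

categories = count_categories(sample_events)
# most_common() sorts by descending count, as in the report output.
ranking = categories.most_common()
```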

If you report this information, then it’s useful to include an explanatory table for the different categories.

Antivirus detection: All the info about how the malware is detected by the antivirus products

Artifacts dropped: Any artifact (files, registry keys etc.) dropped by the malware or other modifications to the system

Attribution: Identification of the group, organisation, or country behind the attack

External analysis: Any other result from additional analysis of the malware like tools output

Financial fraud: Financial Fraud indicators

Internal reference: Reference used by the publishing party (e.g. ticket number)

Network activity: Information about network traffic generated by the malware

Other: Attributes that are not part of any other category or are meant to be used as a component in MISP objects in the future

Payload delivery: Information about how the malware is delivered

Payload installation: Info on where the malware gets installed in the system

Payload type: Information about the final payload(s)

Persistence mechanism: Mechanisms used by the malware to start at boot

Person: A human being - natural person

Social network: Social networks and platforms

Support Tool: Tools supporting analysis or detection of the event

Targeting data: Internal Attack Targeting and Compromise Information

Reporting the attribute types might only be useful if you report to a more technical audience.

TLP-codes

Reporting the TLP codes of the received events is useful to indicate whether information was available to everyone or only to restricted receivers. Note that the script tries to sanitise the different notations of the TLP codes by transforming everything to lower case and removing spaces. For example, the notations “TLP:White”, “TLP: White” and “tlp : white” should all result in “tlp:white”.

tlp:white 338

tlp:green 286

tlp:amber 7

tlp:red 0
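The sanitising step described above (lower case, spaces removed) fits in one line. The sketch below is an illustration and not the code from the script: the function names `normalise_tlp` and `count_tlp` are hypothetical.

```python
from collections import Counter


def normalise_tlp(tag):
    """Lower-case a tag and strip all whitespace from it."""
    return "".join(tag.lower().split())


def count_tlp(tags):
    """Count the normalised TLP tags, ignoring every other tag."""
    normalised = (normalise_tlp(tag) for tag in tags)
    return Counter(tag for tag in normalised if tag.startswith("tlp:"))


tlp_counts = count_tlp(["TLP:White", "TLP: White", "tlp : white", "tlp:green", "osint"])
```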

MISP Galaxy

The use of the MISP Galaxy really depends on your sector. The next sections of the report dive deeper into some categories of the MISP Galaxies, but items you can report include, for example

- misp-galaxy:banker : Banker malware

- misp-galaxy:financial-fraud : Financial fraud

- misp-galaxy:tool : Threat actors tooling

MISP Galaxy MITRE

Events which are added to the clusters starting with ‘misp-galaxy:mitre’ are reported individually. This is good data to report on how your threat intel feed covers the MITRE ATT&CK framework. As mentioned before, the quality of this data depends on the contributors.

misp-galaxy:mitre-enterprise-attack-intrusion-set="APT28 - G0007" 12

misp-galaxy:mitre-enterprise-attack-intrusion-set="Lazarus Group - G0032" 5

misp-galaxy:mitre-intrusion-set="APT28" 4

misp-galaxy:mitre-enterprise-attack-intrusion-set="MuddyWater - G0069" 3

misp-galaxy:mitre-enterprise-attack-attack-pattern="Spearphishing Attachment - T1193" 3

misp-galaxy:mitre-attack-pattern="Standard Application Layer Protocol - T1071" 3

misp-galaxy:mitre-attack-pattern="Spearphishing Attachment - T1193" 3

misp-galaxy:mitre-pre-attack-intrusion-set="APT28" 2

misp-galaxy:mitre-malware="AutoIt" 2

misp-galaxy:mitre-enterprise-attack-attack-patt

MISP Galaxy Threat Actor

Similar to the MITRE ATT&CK framework, the script also reports on the threat actors, if they have been added by the event contributors. This is also a great resource to report.

misp-galaxy:threat-actor="Sofacy" 24

misp-galaxy:threat-actor="Lazarus Group" 9

misp-galaxy:threat-actor="OilRig" 5

misp-galaxy:threat-actor="MuddyWater" 5

misp-galaxy:threat-actor="INDRIK SPIDER" 5

misp-galaxy:threat-actor="APT37" 5
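Both the MITRE and the threat actor counts come down to counting event tags that share a prefix. The following sketch assumes events are dictionaries in the MISP core format with a `Tag` list whose items have a `name` field. The function name `count_galaxy_tags` and the sample events are hypothetical.

```python
from collections import Counter


def count_galaxy_tags(events, prefix):
    """Count, per tag name, the events tagged with a name starting with prefix."""
    counts = Counter()
    for event in events:
        for tag in event.get("Tag", []):
            name = tag.get("name", "")
            if name.startswith(prefix):
                counts[name] += 1
    return counts


# Made-up sample data.
sample_events = [
    {"info": "A", "Tag": [
        {"name": 'misp-galaxy:threat-actor="Sofacy"'},
        {"name": "tlp:white"},
    ]},
    {"info": "B", "Tag": [
        {"name": 'misp-galaxy:threat-actor="Sofacy"'},
        {"name": 'misp-galaxy:mitre-enterprise-attack-intrusion-set="APT28 - G0007"'},
    ]},
]

actors = count_galaxy_tags(sample_events, "misp-galaxy:threat-actor")
mitre = count_galaxy_tags(sample_events, "misp-galaxy:mitre")
```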

Reporting failures

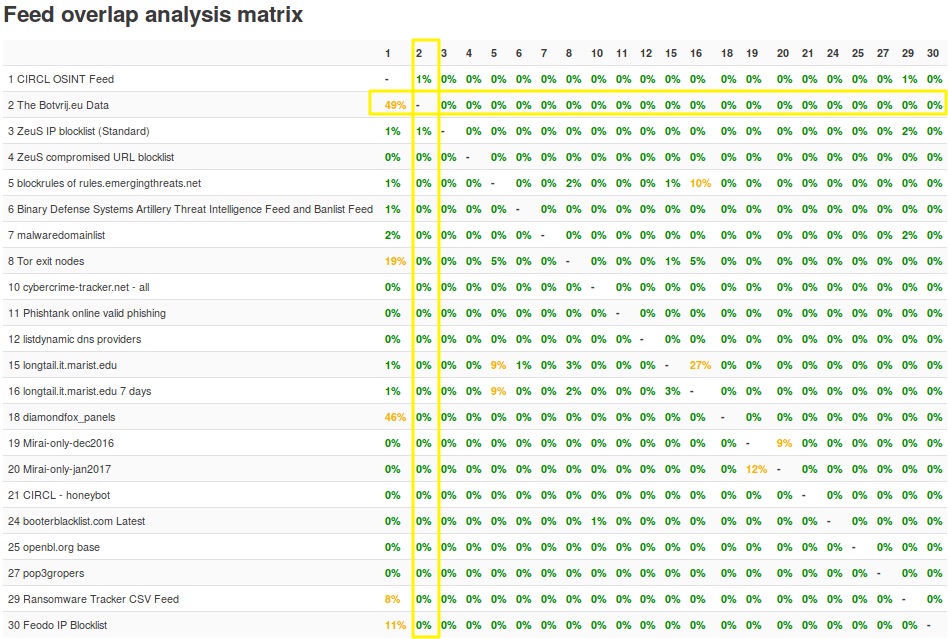

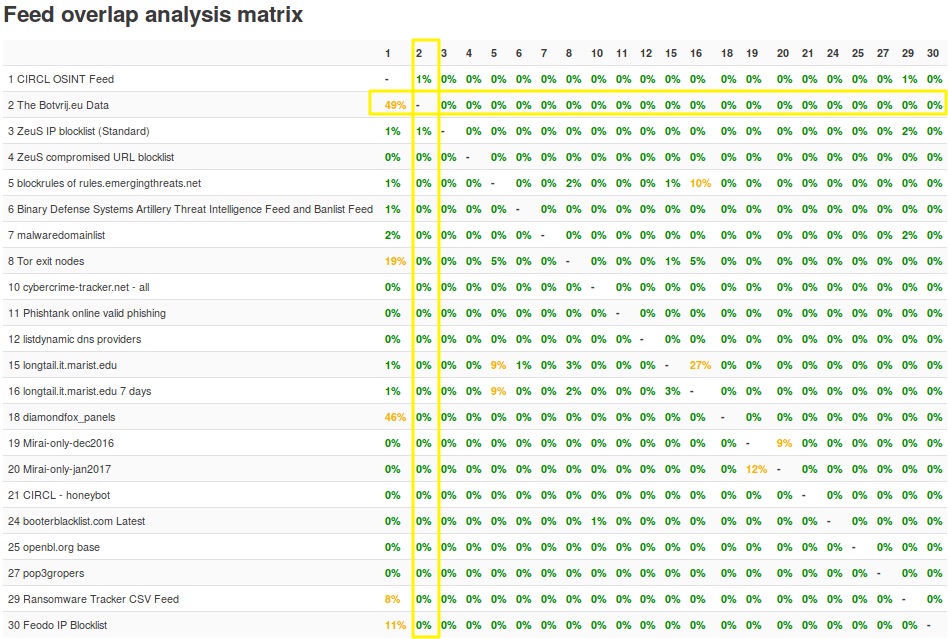

Do not get trapped into the “my instance has more indicators than yours” mindset. Ultimately it’s the quality of the indicators that counts. Having recent and vouched indicators is important (sightings aren’t included in the reporting yet). As a start, the MISP documentation provides you with a Feed overlap matrix.