Honeypots

I have been working with honeypots for a long time. I consider them one of the best sources for statistics and ongoing trends. Most importantly, they give insight into new exploits and attacker activity.

In the past I used my own set of tools to collect the information from different honeypots. The tools are available on GitHub in the cudeso-honeypot repository. I also have an older blog post on ‘Using ELK as a dashboard for honeypots’.

Modern Honey Network

A couple of months ago I migrated some honeypots to Modern Honey Network (MHN) from ThreatStream. It’s a much more “polished” setup than my collection of scripts.

MHN uses MongoDB as its storage back-end, but it is fairly easy to get the data into Elasticsearch. I prefer the Elasticsearch, Logstash and Kibana (ELK) setup because:

- Kibana is an intuitive web interface;

- ELK has a powerful search engine;

- It is reliable, fast and extendable;

- The graphs (dashboard!) in Kibana are easy to set up.

The default installation of MHN (from GitHub) uses older versions of Elasticsearch, Logstash and Kibana. Because I wanted to extend some of the features of MHN (mainly the enrichment of the data via Logstash), I upgraded the ELK stack to:

- Elasticsearch 2.1.0

- Logstash 1:1.5.5-1

- Kibana 4.3.0

Apart from some minor changes to the configuration files, the upgrade was straightforward. Unfortunately, after the upgrade Kibana could no longer plot the data on world maps (the “geo” feature).

Setting up a tile map (a geographic map) resulted in an error: No Compatible Fields: The “mhn-*” index pattern does not contain any of the following field types: geo_point.

This issue is listed in the Elastic discussion forum.

Fixing the Kibana geo_point error for MHN

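The problem is solved by submitting your own index template AND changing the way Logstash parses the MHN data. Both parts are needed: Elasticsearch dynamic mapping never detects geo_point on its own, so the location fields have to be declared as geo_point in a template before the index is created, and Logstash has to deliver the coordinates in a form that a geo_point field accepts.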

Submit the Elasticsearch template

The first step is to stop Logstash so that no new data is processed.

sudo supervisorctl stop logstash

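Then remove all the existing data. A template is only applied when an index is created, and the type of an existing field cannot be changed, so to keep your existing data you would have to export it, delete the indexes, apply the template and then re-import everything. With one index per day, or per hour as in the MHN Logstash configuration, this becomes cumbersome. I decided to go with a new, empty database of honeypot data. Be aware that deleting _all also removes the .kibana index, which holds your Kibana settings, index patterns, visualizations and dashboards.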

curl -XDELETE 'http://localhost:9200/_all'

You should get

{"acknowledged":true}

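Now upload the new template. Note that this template maps the location field of the GeoIP data for the source IP (src_ip_geo.location) and the destination IP (dest_ip_geo.location) as geo_point. The source IP (src_ip) and destination IP (dest_ip) fields themselves are set to the field type “ip”, and the ports to “long”.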

curl -XPUT http://localhost:9200/_template/mhn_per_index -d '
{
  "template" : "mhn-*",
  "mappings" : {
    "event" : {
      "properties": {
        "dest_port": {"type": "long"},
        "src_port": {"type": "long"},
        "src_ip": {"type": "ip"},
        "dest_ip": {"type": "ip"},
        "src_ip_geo": {
          "properties": {
            "location": {"type": "geo_point"}
          }
        },
        "dest_ip_geo": {
          "properties": {
            "location": {"type": "geo_point"}
          }
        }
      }
    }
  }
}'

After submitting the command you should get

{"acknowledged":true}
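If you prefer to script this step, the same template can also be submitted from Python. The sketch below is a hypothetical alternative to the curl command above using only the standard library; it assumes Elasticsearch listens on http://localhost:9200, and the names build_mhn_template and put_template are made up for this example. The template body is identical to the one in the curl command.

```python
import json
import urllib.request

ES_URL = "http://localhost:9200"  # assumption: local Elasticsearch node


def _geo_object():
    """A GeoIP object whose "location" sub-field is mapped as geo_point."""
    return {"properties": {"location": {"type": "geo_point"}}}


def build_mhn_template():
    """Return the mhn_per_index template from the curl command as a dict."""
    return {
        "template": "mhn-*",  # applied to new indexes whose name matches mhn-*
        "mappings": {
            "event": {  # must match document_type in the Logstash output
                "properties": {
                    "dest_port": {"type": "long"},
                    "src_port": {"type": "long"},
                    "src_ip": {"type": "ip"},
                    "dest_ip": {"type": "ip"},
                    "src_ip_geo": _geo_object(),
                    "dest_ip_geo": _geo_object(),
                }
            }
        },
    }


def put_template(name, body, base_url=ES_URL):
    """PUT an index template and return the decoded JSON response."""
    request = urllib.request.Request(
        f"{base_url}/_template/{name}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling put_template("mhn_per_index", build_mhn_template()) against a running node should return the same acknowledgement as curl, decoded into a Python dict.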

Edit MHN Logstash configuration

Now we need to change the way Logstash parses the MHN data. I also include some of the changes needed for Logstash to work with the new Elasticsearch version (document_type and the hosts notation).

Open the MHN Logstash configuration file and change it to the following:

sudo vi /opt/logstash/mhn.conf

input {
  file {
    path => "/var/log/mhn/mhn-json.log"
    start_position => "end"
    sincedb_path => "/opt/logstash/sincedb"
  }
}

filter {
  json {
    source => "message"
  }

  geoip {
    source => "src_ip"
    target => "src_ip_geo"
    database => "/opt/GeoLiteCity.dat"
    add_field => ["[src_ip_geo][location]",[ "%{[src_ip_geo][longitude]}" , "%{[src_ip_geo][latitude]}" ] ]
  }

  geoip {
    source => "dest_ip"
    target => "dest_ip_geo"
    database => "/opt/GeoLiteCity.dat"
    add_field => ["[dest_ip_geo][location]",[ "%{[dest_ip_geo][longitude]}" , "%{[dest_ip_geo][latitude]}" ] ]
  }
}

output {
  elasticsearch {
    hosts => "127.0.0.1:9200"
    index => "mhn-%{+YYYYMMddHH00}"
    document_type => "event"
  }
}
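The index setting mhn-%{+YYYYMMddHH00} creates one index per hour: Logstash formats the event's @timestamp in UTC with a Joda-Time pattern, and the trailing 00 is a literal. The following Python sketch mimics that naming so you can see which index names the mhn-* template will match; mhn_index_name and indexes_for_day are hypothetical helpers, not part of MHN or Logstash.

```python
from datetime import date, datetime, timedelta, timezone


def mhn_index_name(ts: datetime) -> str:
    """Mimic the Logstash index setting mhn-%{+YYYYMMddHH00}.

    ts must be timezone-aware. The timestamp is converted to UTC and the
    trailing "00" is a literal, so all events of one UTC hour share an index.
    """
    if ts.tzinfo is None:
        raise ValueError("ts must be timezone-aware")
    utc = ts.astimezone(timezone.utc)
    return "mhn-" + utc.strftime("%Y%m%d%H") + "00"


def indexes_for_day(day: date) -> list[str]:
    """Return the 24 hourly index names for one UTC calendar day."""
    start = datetime(day.year, day.month, day.day, tzinfo=timezone.utc)
    return [mhn_index_name(start + timedelta(hours=h)) for h in range(24)]
```

For example, indexes_for_day(date(2015, 12, 1)) yields 24 names from mhn-201512010000 to mhn-201512012300, which shows why re-importing data index by index quickly becomes cumbersome.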

What has changed?

4a5
> sincedb_path => "/opt/logstash/sincedb"
17,21c18
< add_field => [ "[src_ip_geo][coordinates]", "%{[src_ip_geo][longitude]}" ]
< add_field => [ "[src_ip_geo][coordinates]", "%{[src_ip_geo][latitude]}" ]
< }
< mutate {
< convert => [ "[src_ip_geo][coordinates]", "float"]
---
> add_field => ["[src_ip_geo][location]",[ "%{[src_ip_geo][longitude]}" , "%{[src_ip_geo][latitude]}" ] ]
28,29c25
< add_field => [ "[dest_ip_geo][coordinates]", "%{[dest_ip_geo][longitude]}" ]
< add_field => [ "[dest_ip_geo][coordinates]", "%{[dest_ip_geo][latitude]}" ]
---
> add_field => ["[dest_ip_geo][location]",[ "%{[dest_ip_geo][longitude]}" , "%{[dest_ip_geo][latitude]}" ] ]
32,34d27
< mutate {
< convert => [ "[dest_ip_geo][coordinates]", "float"]
< }
39,41c32
< host => "127.0.0.1"
< port => 9200
< protocol => "http"
---
> hosts => "127.0.0.1:9200"
43c34
< index_type => "event"
---
> document_type => "event"
45a37
>
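The key change is that longitude and latitude now end up together in a single location field. When a geo_point is given as an array, Elasticsearch expects [lon, lat] (GeoJSON order), which is why longitude comes first in add_field; the string form "lat,lon" uses the opposite order. The Python sketch below only illustrates that transformation on an already decoded MHN event. add_location and location_as_string are hypothetical names, and unlike the Logstash add_field, whose sprintf references produce strings, the sketch converts the values to floats.

```python
def add_location(event: dict, target: str) -> dict:
    """Add event[target]["location"] = [lon, lat] to a decoded MHN event.

    Mirrors the add_field line in mhn.conf, but converts the values to
    floats. Events without GeoIP data for the target are returned unchanged.
    """
    geo = event.get(target)
    if not isinstance(geo, dict) or "longitude" not in geo or "latitude" not in geo:
        return event
    # Array form of a geo_point: longitude first, then latitude.
    geo["location"] = [float(geo["longitude"]), float(geo["latitude"])]
    return event


def location_as_string(geo: dict) -> str:
    """The string form of a geo_point uses the opposite order: "lat,lon"."""
    return f"{float(geo['latitude'])},{float(geo['longitude'])}"
```

Mixing up the two orders does not raise an error as long as the values stay within range; the points simply end up in the wrong place on the map.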

Now start Logstash again and check the log files for any errors.

sudo supervisorctl start logstash
sudo tail /var/log/mhn/logstash.err
sudo tail /var/log/mhn/logstash.log

Fixing Kibana

Next, fix the way Kibana deals with the indexes. First check that there is already data in Elasticsearch. The indexes are created automatically, with the new template applied, as soon as Logstash inserts data.

curl 'localhost:9200/mhn-*/_search?pretty&size=1'
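To confirm that the template was actually applied, you can also look at the mapping of the new indexes (GET /mhn-*/_mapping) and check that the location fields are of type geo_point. The sketch below is a hypothetical helper for that check; it assumes the Elasticsearch 2.x response layout (index, then mappings, then document type, then properties) and a local node on port 9200.

```python
import json
import urllib.request


def geo_point_fields(mapping_response: dict) -> list:
    """Return the sorted dotted paths of all geo_point fields in a _mapping response."""
    found = set()

    def walk(properties: dict, prefix: str) -> None:
        for name, spec in properties.items():
            path = prefix + name
            if spec.get("type") == "geo_point":
                found.add(path)
            if "properties" in spec:  # object field: descend into its sub-fields
                walk(spec["properties"], path + ".")

    # Elasticsearch 2.x layout: {index: {"mappings": {type: {"properties": ...}}}}
    for index_body in mapping_response.values():
        for type_body in index_body.get("mappings", {}).values():
            walk(type_body.get("properties", {}), "")
    return sorted(found)


def fetch_mapping(pattern: str = "mhn-*", base_url: str = "http://localhost:9200") -> dict:
    """GET /<pattern>/_mapping from Elasticsearch and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/{pattern}/_mapping") as response:
        return json.loads(response.read().decode("utf-8"))
```

Once data has been indexed with the template, print(geo_point_fields(fetch_mapping())) should list dest_ip_geo.location and src_ip_geo.location. An empty list means the indexes were created without the template.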

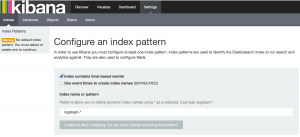

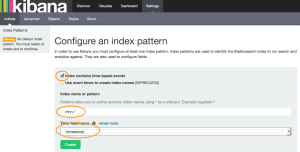

Kibana will prompt you to supply a valid index pattern.

Enter mhn-* as the index pattern. Make sure that you check the box indicating that the index contains time-based events, then click Create.

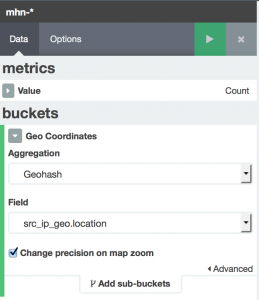

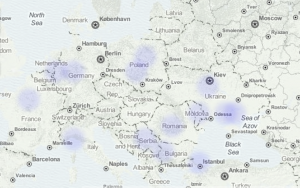

Once all this is done, try to create the tile map again. If all went well, Kibana now recognises the geo_point fields.

Changes to MHN

I’ve been running MHN with a couple of changes, for example enrichment of IP data from certain honeypots with information retrieved from external sources (VirusTotal), extraction of connections from specific networks and ASNs, and full request logging via hpfeeds for Glastopf.

The changes are still a work in progress, but once everything is finished the scripts will be published on GitHub.
