Honeypots

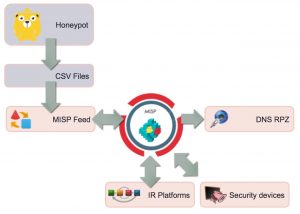

I run a couple of honeypots that allow me to map some of the bad actors and scanners on the internet. The most popular honeypots are Dionaea, Cowrie (SSH, previously Kippo) and Conpot (ICS). So far I haven't really used this honeypot data for defensive purposes, but a recent write-up on using ModSecurity and MISP inspired me to turn this data into information that I can use as a defender.

The core tool I will be using is MISP, and in particular its feed system, to generate DNS RPZ (Response Policy Zone) zones.

MISP Feeds

MISP includes a feature called feeds that lets you fetch MISP events directly from a server without prior agreement. Two OSINT feeds are included by default in MISP (I manage one of them, botvrij.eu) and can be enabled on any new installation. Providers and partners can publish their own feeds using the simple PyMISP feed-generator.

Besides using the default MISP format, you can also import feeds in CSV or in a freetext format. For this project I decided to use the CSV format.

Preparing the honeypot data

The first honeypots I want to tackle are those running Snare, a web application honeypot that mimics various real-world web applications. The log output of the default version of Snare is not easy to process, so I added a small change that makes Snare also log to JSON (see GitHub). The script snare2blacklist.py below, which runs after the nightly log rotation, then creates the CSV file.

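```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""snare2blacklist.py: turn the rotated Snare JSON log into a CSV blocklist."""

import datetime
import json

blocklist_json = "/opt/snare/json/snare.json.0"
blocklist_csv = "/var/www/blocklist/data/blocklist-snare.csv"
blocklist_source = "Snare Honeypot"

blocklist_timestamp = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")

# Unique source IPs, kept in the order in which they first appear.
ip_list = []
seen = set()

with open(blocklist_json, "r") as f:
    for line in f:
        line = line.strip()
        if not line:
            continue
        try:
            # Each line is one JSON object; the client address is in peer.ip.
            ip = json.loads(line)["peer"]["ip"].strip()
        except (ValueError, KeyError, TypeError, AttributeError):
            # Skip malformed lines and entries without a peer IP.
            continue
        if ip not in seen:
            seen.add(ip)
            ip_list.append(ip)

if ip_list:
    with open(blocklist_csv, "w") as f:
        # The header starts with '#' so the MISP feed can exclude it; the
        # trailing newline keeps the first IP on a line of its own.
        f.write("# %s - Last update : %s\n" % (blocklist_source, blocklist_timestamp))
        for ip in ip_list:
            f.write("%s\n" % ip)
```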
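Honeypot logs can contain addresses you never want on a blocklist, for instance private ranges or your own scanners. An optional filtering step, which is not part of the original script, could be sketched with Python's `ipaddress` module; the function name and the allowlist parameter are hypothetical.

```python
import ipaddress


def filter_blocklist(ips, allowlist=()):
    """Keep only valid, globally routable addresses that are not allowlisted.

    `allowlist` is a hypothetical list of networks (e.g. "192.0.2.0/24")
    that must never end up in the feed, such as your own scanners.
    """
    allowed_nets = [ipaddress.ip_network(net) for net in allowlist]
    kept = []
    for ip in ips:
        try:
            addr = ipaddress.ip_address(ip)
        except ValueError:
            # Not an IP address at all (garbled log field, hostname, ...).
            continue
        if not addr.is_global:
            # Private, loopback, link-local, documentation ranges and similar.
            continue
        if any(addr in net for net in allowed_nets):
            continue
        kept.append(str(addr))
    return kept
```

Calling it just before the CSV is written, e.g. `ip_list = filter_blocklist(ip_list)`, keeps such addresses out of the feed.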

The file blocklist-snare.csv is then made available through an internal web server so that an internal MISP instance can fetch it. The next step is to integrate this data into MISP.
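If no web server is at hand yet, Python's standard library can serve the directory for a quick test. A minimal sketch, assuming the CSV lives under `/var/www/blocklist/data/` as in the script above; the port and bind address are assumptions, and `http.server` is not meant for production, so a regular web server remains the better long-term choice.

```python
import functools
import http.server

# Assumed document root; the CSV is then reachable at /data/blocklist-snare.csv.
BLOCKLIST_ROOT = "/var/www/blocklist"


def make_server(root=BLOCKLIST_ROOT, host="127.0.0.1", port=8080):
    """Build (but do not start) a static file server rooted at `root`.

    Bind `host` to an internal address that only the MISP server can reach.
    """
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=root)
    return http.server.ThreadingHTTPServer((host, port), handler)
```

Calling `make_server(host=...).serve_forever()` then serves the file until the process is interrupted.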

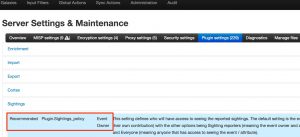

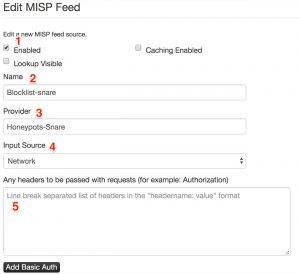

Setting up a custom MISP feed

MISP feeds are available under the menu Sync Actions – List Feeds. You add a new feed via Add Feed in the left menu and then supply a couple of parameters:

- Enable the feed (feeds are not enabled by default);

- Give the feed a descriptive name (free text field);

- Give the description of the provider (free text field);

- The input source: either a local source or a network source;

- Any authentication headers that you need to send before you can fetch the feed;

- The URL where MISP can fetch the feed;

- The format of the feed, either MISP format, CSV or freetext;

- Whether each import creates a new event or a single event is reused. For my purpose I use one single reference event and attach all the attributes (the IPs) to it;

- Supply an event ID to use. If you do not supply an event ID then MISP will create this event for you;

- Which fields to use from the CSV import;

- Any lines that need to be excluded. The first line in my CSV file is a timestamp prefixed with the ‘#’ symbol, which obviously should not be imported;

- Whether to publish the event data automatically, making it available to other consumers;

- Whether to do a delta merge. This is extremely useful if you want to avoid having too many ‘old’ attributes in the event;

- The default distribution level. This information is for internal use only, so I’ll only share it with this community;

- A specific tag that you’d like to add to the import. Using tagging (especially ‘block-or-filter-list’) makes it easy for you to differentiate later between the different event types.
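The ‘fields’, ‘excluded lines’ and ‘delta merge’ settings are easier to reason about with a local approximation. The sketch below is not MISP's parser: it assumes the value is taken from one CSV column (numbered from 1), that exclusions are expressed as a regular expression such as `^#`, and that a delta merge adds new values and removes values missing from the latest import. All names are hypothetical.

```python
import re


def preview_csv_feed(text, value_column=1, exclude_regex=r"^#", delimiter=","):
    """Approximate which values a CSV feed import would keep (assumed rules)."""
    exclude = re.compile(exclude_regex) if exclude_regex else None
    values = []
    for line in text.splitlines():
        line = line.strip()
        # Skip empty lines and lines matching the exclusion pattern.
        if not line or (exclude and exclude.search(line)):
            continue
        fields = [field.strip() for field in line.split(delimiter)]
        if len(fields) >= value_column:
            values.append(fields[value_column - 1])
    return values


def delta(previous, current):
    """Sketch of a delta merge: (values to add, values to remove) for the event."""
    prev, curr = set(previous), set(current)
    return sorted(curr - prev), sorted(prev - curr)
```

The preview also shows why the header needs its own line: a header written without a trailing newline, such as `"# header1.1.1.1"`, is excluded as a whole and silently drops the first IP.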

MISP Event creation

Once the feed is configured and the background workers have started to pull in data, a new event will be created. This event will contain the tags and community settings defined in the feed configuration.

The event itself contains the IPs from the blocklist as attributes. An additional benefit of storing these blocklists in MISP is that it gives you an overview of how they correlate with other events (or other blocklists).
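MISP computes correlations itself; the idea behind that overview can be illustrated as a set intersection between the blocklist and the attributes of other events. A minimal sketch with hypothetical event names, not MISP's correlation engine:

```python
def correlate(blocklist, other_events):
    """Return, per event name, the IPs it shares with `blocklist`.

    `other_events` maps a (hypothetical) event name to its IP attributes.
    Events without any overlap are left out of the result.
    """
    ips = set(blocklist)
    overlaps = {}
    for name, attributes in other_events.items():
        shared = ips.intersection(attributes)
        if shared:
            overlaps[name] = sorted(shared)
    return overlaps
```

For example, an IP seen by both the Snare and a Cowrie honeypot would show up under the Cowrie event.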

RPZ creation

You can then generate the RPZ zone file manually via “Download as …” by choosing the RPZ zone file format.
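For reference, an RPZ zone is an ordinary DNS zone whose owner names encode the policy triggers. A minimal sketch that builds one from a list of IPv4 addresses, assuming the `rpz-ip` trigger (which matches addresses in DNS answers) and the `CNAME .` action (answer NXDOMAIN). The SOA and NS values are placeholders, IPv6 is skipped for brevity, and MISP's own export may differ in layout and in the triggers it uses.

```python
import ipaddress


def rpz_ip_owner(ip):
    """Owner name of an IPv4 /32 rpz-ip trigger: 1.2.3.4 -> 32.4.3.2.1.rpz-ip"""
    addr = ipaddress.IPv4Address(ip)
    return "32.%s.rpz-ip" % ".".join(reversed(str(addr).split(".")))


def rpz_zone(ips, serial, ttl=300):
    """Build a small RPZ zone; SOA/NS use placeholder names."""
    lines = [
        "$TTL %d" % ttl,
        "@ SOA localhost. root.localhost. %d 3600 600 86400 %d" % (serial, ttl),
        "@ NS localhost.",
    ]
    for ip in ips:
        try:
            owner = rpz_ip_owner(ip)
        except ValueError:
            # IPv6 and malformed entries are skipped in this sketch.
            continue
        # "CNAME ." makes the resolver answer NXDOMAIN for matching responses.
        lines.append("%s CNAME ." % owner)
    return "\n".join(lines) + "\n"
```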

The manual export is perfect for testing the setup, but eventually you will want to automate this. This can be done via the automated export functionality of MISP. To generate the RPZ zones, you can use a request similar to:

https://<url>/attributes/rpz/download/[tags]/[eventId]/
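Such a request can be scripted and run from cron. A minimal sketch using only the standard library, following the URL pattern above; the base URL, API key, tag string, event ID and zone-file path are hypothetical placeholders to check against your own instance. MISP reads the API key from the `Authorization` header.

```python
import os
import urllib.request


def rpz_request(base_url, api_key, tags, event_id):
    """Build a request for the RPZ export using the URL pattern above."""
    url = "%s/attributes/rpz/download/%s/%s/" % (base_url.rstrip("/"), tags, event_id)
    # The MISP automation key goes into the Authorization header.
    return urllib.request.Request(url, headers={"Authorization": api_key})


def download_zone(request, zone_path, timeout=60):
    """Fetch the export and replace `zone_path` (hypothetical path) atomically."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        data = response.read()
    tmp_path = zone_path + ".tmp"
    with open(tmp_path, "wb") as fh:
        fh.write(data)
    # Rename only after a complete write so the resolver never sees half a zone.
    os.replace(tmp_path, zone_path)
```

After the download, reload the zone in the resolver, for example with `rndc reload <zone>` on BIND.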